Utopia ESB

The basic idea of the Enterprise Service Bus paints a wonderful picture of harmonious coexistence, integration, and collaboration of software services. Services for a particular general cause are built or procured once and reused across the Enterprise by way of publishing them and their capabilities in a corporate services repository from where they can be discovered. The repository holds contracts and policies that allow dynamically generating functional adapters to integrate with services. Collaboration and communication are virtualized through an intermediary layer that knows how to translate messages from and to any other service hooked into the ESB, like a Babel fish in the Hitchhiker’s Guide to the Galaxy. The ESB is a bus, meaning it aspires to be a smart, virtualizing, mediating, orchestrating messaging substrate permeating the Enterprise, providing uniform and mediated access anytime and anywhere throughout today’s global Enterprise. That idea is so beautiful, it rivals My Little Pony. Sadly, it’s also about as realistic. We tried regardless.

As with many utopian ideas, before we can get to the pure ideal of an ESB, there’s a less ideal and usually fairly ugly phase in which non-conformant services are made conformant. Until they are turned into WS-* services, every CICS transaction and SAP BAPI is fronted with a translator, and as that skinning renovation takes place, there’s also some optimization around message flow: messages get batched or de-batched, enriched or reduced. That phase also taught people the value and the lure of central control. SOA Governance is an interesting idea to get customers drunk on. That ultimately led to cheating on the ‘B’. When you look around at products proudly carrying the moniker ‘Enterprise Service Bus’, you will see hubs. In practice, the B in ESB is mostly just a lie. Some vendors sell ESB servers, some even sell ESB appliances. If you need to walk to a central place to talk to anyone, it’s a hub. Not a bus.

Yet, the bus does exist. The IP network is the bus. It turns out to suit us well on the Internet. Mind that I’m explicitly talking about “IP network” and not “Web” as I do believe that there are very many useful protocols beyond HTTP. The Web is obviously the banner example for a successful implementation of services on the IP network that does just fine without any form of centralized services other than the highly redundant domain name system.

Centralized control over services does not scale in any dimension. Intentionally creating a bottleneck through a centrally controlling committee of ESB machines, however far scaled out, is not a winning proposition at a time when every potential or actual customer carries a powerful computer in their pocket that lets them initiate ad-hoc transactions at any time and from anywhere, and when vehicles, machines, and devices increasingly spew out telemetry and accept remote control commands. Central control and policy-driven governance over all services in an Enterprise also kills agility and reduces the ability to adapt services to changing needs, because governance invariably implies process and certification. Five-year plan, anyone?

If the ESB architecture ideal weren’t a failure already, the competitive pressure to adopt direct digital interaction with customers via Web and Apps, and therefore to scale not to the size of the enterprise but to the size of the enterprise’s customer base, will seal its collapse.

Service Orientation

While the ESB as a concept permeating the entire Enterprise is dead, the related notion of Service Orientation is thriving even though the four tenets of SOA are rarely mentioned anymore. HTTP-based services on the Web embrace explicit message passing. They mostly do so over the baseline application contract and negotiated payloads that the HTTP specification provides for. In the case of SOAP or XML-RPC, they are using abstractions on top that have their own application protocol semantics. Services are clearly understood as units of management, deployment, and versioning and that understanding is codified in most platform-as-a-service offerings.

That said, while explicit boundaries, autonomy, and contract sharing have been clearly established, the notion of policy-driven compatibility – arguably a political addition to the list to motivate WS-Policy at the time – has generally been replaced by something even more powerful: Code. JavaScript code, to be more precise. Instead of trying to tell a generic client how to adapt to service settings by way of giving it a complex document explaining what switches to turn, clients now get code that turns the switches outright. The other successful alternative is to simply provide no choice. There’s one way to gain access authorization for a service, period. The “policy” is in the docs.

The REST architecture model is service oriented, and I don’t mean to imply that it is so because of any particular influence. The foundational principles were becoming common sense around the time when these terms were coined, as the notion of broadly interoperable programmable services started to gain traction in the late 1990s. The subsequent grand dissent was about whether pure HTTP was sufficient to build these services, or whether the ambitious multi-protocol abstraction of WS-* would be needed. I think it’s fairly easy to declare the winner there.

Federated Autonomous Services

Windows Azure, to name a system that would surely be one to fit the kind of solution complexity that ESBs were aimed at, is a very large distributed system with a significant number of independent multi-tenant services and deployments that are spread across many data centers. In addition to the publicly exposed capabilities, there are quite a number of “invisible” services for provisioning, usage tracking and analysis, billing, diagnostics, deployment, and other purposes. Some components of these internal services integrate with external providers. Windows Azure doesn’t use an ESB. Windows Azure is a federation of autonomous services.

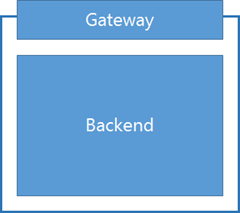

The basic shape of each of these services is effectively identical, and that’s not owing, at least not to my knowledge, to any central architectural directive, even though the services that shipped after the initial wave certainly took a good look at the patterns that emerged. Practically all services have a gateway, whose purpose is to handle, dispatch, and sometimes preprocess incoming network requests or sessions, and a backend that ultimately fulfills the requests. The services interact through public IP space, meaning that if Service Bus wants to talk to its SQL Database backend, it uses a public IP address and not some private IP. The Internet is the bus. The backend and its structure are entirely a private implementation matter. It could be a single role or many roles.

Any gateway’s job is to provide network request management, which includes establishing and maintaining sessions, session security and authorization, API versioning where multiple variants of the same API are often provided in parallel, usage tracking, defense mechanisms, and diagnostics for its areas of responsibility. This functionality is specific and inherent to the service. And it’s not all HTTP. SQL Database has a gateway that speaks the Tabular Data Stream protocol (TDS) over TCP, for instance, and Service Bus has a gateway that speaks AMQP and the proprietary binary Relay and Messaging protocols.
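To make the gateway responsibilities above a little more concrete, here is a minimal in-memory sketch. It is not how any Windows Azure gateway is implemented; the `Gateway` class, the token set, and the `get_order` handlers are all hypothetical names invented for illustration. It only shows authorization, parallel API versions, and usage tracking living in front of a private backend.

```python
from collections import Counter
from typing import Callable, Dict, Set, Tuple

Handler = Callable[[dict], dict]


class Gateway:
    """Hypothetical front door for ONE service; the backend stays private."""

    def __init__(self, valid_tokens: Set[str]) -> None:
        self._valid_tokens = valid_tokens      # stand-in for real authorization
        self._routes: Dict[Tuple[str, str], Handler] = {}
        self.usage: Counter = Counter()        # successful calls per caller

    def register(self, version: str, operation: str, handler: Handler) -> None:
        # Several versions of the same operation can be registered side by side.
        self._routes[(version, operation)] = handler

    def handle(self, token: str, version: str, operation: str, body: dict) -> dict:
        if token not in self._valid_tokens:
            return {"status": 401, "error": "unauthorized"}
        handler = self._routes.get((version, operation))
        if handler is None:
            return {"status": 404, "error": "unknown version or operation"}
        self.usage[token] += 1
        return {"status": 200, "result": handler(body)}


# Two backend variants of the same (made-up) operation, served in parallel.
def get_order_v1(body: dict) -> dict:
    return {"id": body["id"], "total": 42}


def get_order_v2(body: dict) -> dict:
    return {"id": body["id"], "total": {"amount": 42, "currency": "EUR"}}


gateway = Gateway(valid_tokens={"caller-a"})
gateway.register("v1", "get_order", get_order_v1)
gateway.register("v2", "get_order", get_order_v2)

old_client = gateway.handle("caller-a", "v1", "get_order", {"id": 7})
new_client = gateway.handle("caller-a", "v2", "get_order", {"id": 7})
stranger = gateway.handle("nobody", "v2", "get_order", {"id": 7})
```

Old and new clients keep working against their own contract version, the unauthorized caller never reaches the backend, and the usage counter is something the service itself can feed into a billing or diagnostics stream.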

Governance and diagnostics don’t work by putting a man in the middle and watching the traffic go by, which is akin to trying to tell whether a business is healthy by counting the trucks going to its warehouse. Instead, we integrate the data feeds that come out of the respective services and are generated with full knowledge of the internal state, and we concentrate these data streams, like the billing stream, in yet other services that are also autonomous and have their own gateways. All these services interact and integrate even though they’re built by a composite team far exceeding the scale of most Enterprises’ largest projects, and while teams run on separate schedules where deployments into the overall system happen multiple times daily. It works because each service owns its gateway, is explicit about its versioning strategy, and has a very clear mandate to honor published contracts, which includes explicit regression testing. It would be unfathomable to maintain a system of this scale through a centrally governed switchboard service like an ESB.

Well, where does that leave “ESB technologies” like BizTalk Server? The answer is simply that they’re being used for what they’re commonly used for in practice. As a gateway technology. If a service in such a federation has to adhere to a particular industry standard for commerce, for instance if it has to understand EDIFACT or X12 messages sent to it, the gateway would employ an appropriate and proven implementation and thus likely rely on BizTalk if implemented on the Microsoft stack. If a service has to speak to an external service for which it would have to build EDI exchanges, it would likely be very cost effective to also use BizTalk as the appropriate tool for that outbound integration. Likewise, if data has to be extracted from backend-internal message traffic for tracking purposes and BizTalk’s BAM capabilities are a fit, it might be a reasonable component to use for that. If there’s a long-running process around exchanging electronic documents, BizTalk Orchestration might be appropriate; if there’s a document exchange involving humans, then SharePoint and/or Workflow would be a good candidate from the toolset.

For most services, the key gateway technology of choice is HTTP, using frameworks like ASP.NET and Web API, probably paired with IIS features like application request routing, and the gateway is largely stateless.

In this context, Windows Azure Service Bus is, in fact, a technology choice to implement application gateways. A Service Bus namespace thus forms a message bus for “a service” and not for “all services”. It’s as scoped to a service or a set of related services as an IIS site is usually scoped to one or a few related services. The Relay is a way to place a gateway into the cloud for services where the backend resides outside of the cloud environment and it also allows for multiple systems, e.g. branch systems, to be federated into a single gateway to be addressed from other systems and thus form a gateway of gateways. The messaging capabilities with Queues and Pub/Sub Topics provide a way for inbound traffic to be authorized and queued up on behalf of the service, with Service Bus acting as the mediator and first line of defense and where a service will never get a message from the outside world unless it explicitly fetches it from Service Bus. The service can’t be overstressed and it can’t be accessed except through sending it a message.
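The mediator role described above can be sketched with a small in-memory model. This is not the Service Bus API; `InboundQueue`, its `max_depth` limit, and the sender names are assumptions made up for illustration. The point is only that senders are authorized at the queue, that the queue absorbs bursts, and that the service sees a message only when it explicitly asks for one.

```python
from collections import deque
from typing import Deque, Optional, Set


class InboundQueue:
    """Hypothetical stand-in for a queue that sits in front of one service."""

    def __init__(self, authorized_senders: Set[str], max_depth: int) -> None:
        self._authorized = authorized_senders
        self._max_depth = max_depth            # assumed quota, for illustration
        self._messages: Deque[str] = deque()

    def send(self, sender: str, message: str) -> bool:
        # First line of defense: unauthorized traffic never reaches the service.
        if sender not in self._authorized:
            raise PermissionError(f"{sender} may not send to this queue")
        # When the queue is full, the sender is pushed back, not the service.
        if len(self._messages) >= self._max_depth:
            return False
        self._messages.append(message)
        return True

    def receive(self) -> Optional[str]:
        # Pull model: nothing is ever delivered unless the service fetches it.
        return self._messages.popleft() if self._messages else None


queue = InboundQueue(authorized_senders={"partner"}, max_depth=2)

accepted = [queue.send("partner", f"order-{n}") for n in range(3)]

try:
    queue.send("intruder", "drop table orders")
    intruder_blocked = False
except PermissionError:
    intruder_blocked = True

# The service drains the queue at its own pace.
processed = []
while (message := queue.receive()) is not None:
    processed.append(message)
```

In this run the third message is refused because the queue is full, the intruder is rejected before anything is stored, and the service processes exactly the two messages it chose to fetch.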

The next logical step on that journey is to provide federation capabilities with reliable handoff of messages between services, meaning that you can safely enqueue a message within a service and then have Service Bus replicate that message (or one copy of it in the case of pub/sub) over to another service’s gateway, across namespaces and across datacenters or your own sites, using the open AMQP protocol. You can do that today with a few lines of code, but this will become inherent to the system later this year.
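As a rough idea of what such a handoff in “a few lines of code” might look like, here is a sketch of a forwarding pump over an in-memory queue model. It does not use the Service Bus SDK or AMQP; the `Queue` class, its `peek_lock`/`complete`/`abandon` methods, and the `forward_all` helper are hypothetical, modeled on the common lock-then-complete receive pattern. A message is only removed from the source after the destination has accepted it, and the destination ignores message ids it has already seen, so a retry after a failure cannot create a duplicate.

```python
from collections import deque
from typing import Callable, Deque, Dict, Optional, Set, Tuple

Message = Tuple[str, str]  # (message id, body)


class Queue:
    """Hypothetical in-memory queue with lock/complete and duplicate detection."""

    def __init__(self) -> None:
        self._ready: Deque[Message] = deque()
        self._locked: Dict[str, Message] = {}
        self._seen_ids: Set[str] = set()

    def send(self, msg_id: str, body: str) -> bool:
        if msg_id in self._seen_ids:           # duplicate: accept but ignore
            return False
        self._seen_ids.add(msg_id)
        self._ready.append((msg_id, body))
        return True

    def peek_lock(self) -> Optional[Message]:
        if not self._ready:
            return None
        message = self._ready.popleft()
        self._locked[message[0]] = message     # held until completed or abandoned
        return message

    def complete(self, msg_id: str) -> None:
        del self._locked[msg_id]               # handoff done, remove for good

    def abandon(self, msg_id: str) -> None:
        self._ready.appendleft(self._locked.pop(msg_id))  # make it available again


def forward_all(source: Queue, send_to_destination: Callable[[str, str], object]) -> int:
    """Move messages until the source is empty or the link fails; return the count."""
    forwarded = 0
    while (message := source.peek_lock()) is not None:
        msg_id, body = message
        try:
            send_to_destination(msg_id, body)
        except ConnectionError:
            source.abandon(msg_id)             # keep the message for a later retry
            break
        source.complete(msg_id)
        forwarded += 1
    return forwarded


source, destination = Queue(), Queue()
source.send("m1", "order created")
source.send("m2", "order shipped")

attempts = {"count": 0}


def flaky_send(msg_id: str, body: str) -> None:
    # Simulated link to the other service's gateway that drops the second attempt.
    attempts["count"] += 1
    if attempts["count"] == 2:
        raise ConnectionError("link dropped")
    destination.send(msg_id, body)


first_pass = forward_all(source, flaky_send)   # forwards m1, abandons m2
second_pass = forward_all(source, flaky_send)  # retries m2 successfully
```

The design choice worth noting is the order of operations: send to the destination first, complete on the source second. If the pump dies between the two steps, the message is forwarded again later, and the duplicate check on the destination side turns that at-least-once delivery into an effectively single copy.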